The abilities of deep learning are fascinating, just as this Paschke arch CC by David DeHetre

One of the successful insights to training neural networks has been the rectified linear unit, or short the ReLU, as a fast alternative to the traditional activation functions such as the sigmoid or the tanh. One of the major advantages of the simle ReLu is that it does not saturate at the upper end, thus the network is able to distinguish a poor answer from a really poor answer and correct accordingly.

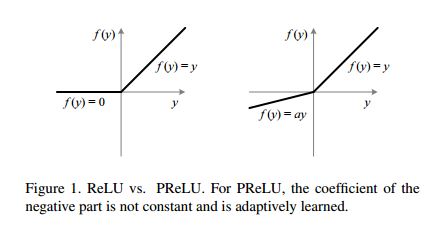

A schematic of the PReLU. The LReLU has the same schematic with the only difference being the α being a constant. Curtesy PReLU article.

A modification to the ReLU, the Leaky ReLU, that would not saturate in the opposite direction has been tested but did not help. Interestingly in a recent paper by the Microsoft© deep learning team, He et al. revisited the subject and introduced a Parametric ReLU, the PReLU, achieving superhuman performance on the imagenet. The PReLU learns the parameter α (alpha) and adjusts it through basic gradient descent.

In this tutorial I will benchmark a few different implementations of the ReLU and PReLU together with Theano. The benchmark test will be on the MNIST database, mostly for convenience. Continue reading