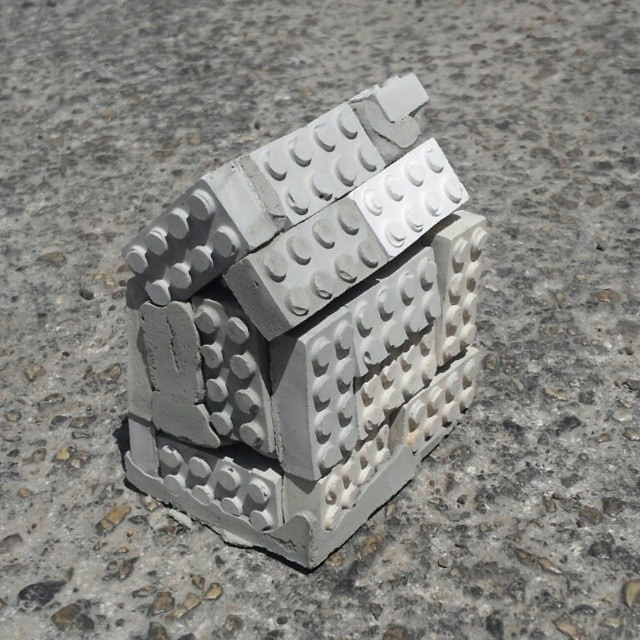

A solid concrete foundation is always important. The image is CC BY [Sharon Pazner](https://flic.kr/p/nSNQzw).

Handling [tabular data](https://en.wikipedia.org/wiki/Table_(information)) is at the heart of most research projects. When I started exploring [Torch](http://torch.ch/), which uses the [Lua](https://www.lua.org/) language for [deep learning](https://en.wikipedia.org/wiki/Deep_learning), I was surprised to find no package corresponding to the functionality available in R’s [data.frame](https://stat.ethz.ch/R-manual/R-devel/library/base/html/data.frame.html). After some searching I found Alex Mili’s [torch-dataframe](https://github.com/AlexMili/torch-dataframe) package, which I decided to adapt to my needs. Over the past few months we have been developing the package (partly the reason for the scarcity of posts lately), and it has now made it onto the Torch [cheat sheet](https://github.com/torch/torch7/wiki/Cheatsheet#data-formats). This series of posts provides a short introduction to the package (version 1.5) and examples of how to implement basic networks in Torch.